VMP

scikit-learn Machine Learning in Python

Neural Network in Your Browser

Intermediate Python

Tutorials for learning Torch

Get Started with TensorFlow

scikit-learn Machine Learning in Python

Get Started with TensorFlow 2.0 for Beginners

Effective TensorFlow 2.0 (in Russian)

Effective TensorFlow 2

tensorflow2.0-examples: Jupyter notebooks to help you get started with TensorFlow 2.0

Deep Learning

TensorFlow 2.0 Tutorial in 10 Minutes

TensorFlow 2.0 Tutorial 01: Basic Image Classification

TensorFlow 2 Tutorial: Get Started in Deep Learning With tf.keras

TensorFlow 2 quickstart for experts

TensorFlow-2.x-Tutorials dragen1860

GitHub TensorFlow+2.0 repository results

Transformers: State-of-the-art Natural Language Processing for TensorFlow 2.0 and PyTorch. https://huggingface.co/transformers

LearningTensorFlow

Make_Money_with_Tensorflow_2.0

TensorFlow Image Classifiers on Android, Android Things, and iOS

Robust VI-SLAM and HD-Map Reconstruction for Location-based Augmented Reality

PyTorch 1.5 released, new and updated APIs including C++ frontend API parity with Python.

Welcome to PyTorch Tutorials

PyTorch tutorials. https://pytorch.org/tutorials/

PyTorch: ~40k repositories on GitHub

TensorFlow: ~80k repositories on GitHub

Magenta: Music and Art Generation with Machine Intelligence GitHub

magenta-demos GitHub

Colab Notebooks magenta

Demonstrations of Magenta Models

AI Duet

Generating Piano Music with Transformer

DDSP Timbre Transfer Demo

DDSP: Differentiable Digital Signal Processing

Neural networks prefer textures, and how to deal with it

New neural network architectures (2020)

scikit-learn GitHub

Introduction to Scikit-learn

Introduction to machine learning with Python and Scikit-Learn

scikit-learn

Supervised learning

Examples

Neural network models (supervised)

matplotlib tutorials

matplotlib gallery

SciPy Cookbook

Python SciPy Tutorial: Learn with Example

Scipy Lecture Notes

autofig

Deep Learning: transfer learning and fine-tuning of deep convolutional neural networks (2016)

impersonator PyTorch implementation of our ICCV 2019 paper: Liquid Warping GAN: A Unified Framework for Human Motion Imitation, Appearance Transfer and Novel View Synthesis https://svip-lab.github.io/project/im…

Liquid Warping GAN: A Unified Framework for Human Motion Imitation, Appearance Transfer and Novel View Synthesis ICCV 2019

svip-lab.github.io

Structured3D: A Large Photo-realistic Dataset for Structured 3D Modeling

Image synthesis with deep neural networks, Victor Lempitsky (2016)

Avatar Digitization From a Single Image For Real-Time Rendering (SIGGRAPH Asia 2017)

Deep Learning VK

10 Best Machine Learning Frameworks in 2020

PyTorch Tutorial: How to Develop Deep Learning Models with Python

Detecting COVID-19 in X-ray images with Keras, TensorFlow, and Deep Learning

Deep Learning Adventures – Tensorflow In Practice – Session 3

COVID-19 Outbreak Prediction using Machine Learning | Machine Learning Training | Edureka, 29 Mar 2020

Dragonfly Daily 17 Image segmentation with Deep Learning in Dragonfly (2020)

19 Advanced Topics with Deep Learning in Dragonfly (2020)

Future of AI/ML | Rise Of Artificial Intelligence & Machine Learning | AI and ML Training | Edureka, 17 Mar 2020

Marc Niethammer: “Deep Learning for Medical Image Registration”

Deep Learning and 3D Mapping

3D Deep Learning with TensorFlow 2

kaggle Faster Data Science Education

Singular value decomposition

The NS-CL neural network interprets a scene, requiring only 5,000 training images

scikit-learn Machine Learning in Python

2019

Gaussian Process

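Mathematics lectures, Faculty of Mechanics and Mathematics, Moscow State University (MSU)

Lectures at the Faculty of Mathematics and Mechanics, St. Petersburg State University (SPbU)

Deep Learning in Plain Terms (Deep Learning на пальцах)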

A list of ICs and IPs for AI, Machine Learning and Deep Learning

scikit-learn Machine Learning in Python

Fundamentals of Bayesian inference

Google AI Publication database

mit-deep-learning-book-pdf

Deep Bayes in deep learning (2017)

PyCharm for Anaconda

deep-learning-specialization-coursera

Deep Learning Specialization by Andrew Ng on Coursera

Deep Learning Specialization: Master Deep Learning, and Break into AI

music21 5.7.0 pip install music21

What is music21?

music21 Documentation

AI, a practical course: emotion-based music transformation (2018)

25 useful open-source machine learning projects

Robust VI-SLAM and HD-Map Reconstruction for Location-based Augmented Reality guofeng-zhang

Speech and Language Processing (3rd ed. draft)

Structured Matrix Computations from Structured Tensors, Lecture 3: The Tucker and Tensor Train Decompositions, Charles F. Van Loan

Tensor Ring Decomposition, Q. Zhao et al.

A Randomized Tensor Train Singular Value Decomposition

The Tensor-Train Format and Its Applications

Tensor decompositions and their applications: a lecture at Yandex

Matrix Computations, Golub

Introduction to tensor decompositions and their applications

Tensorized neural networks

On the Expressive Power of Deep Learning: A Tensor Analysis. JMLR: Workshop and Conference Proceedings, vol. 49:1–31, 2016

Expressive Power of Recurrent Neural Networks

The Mathematics of Neural Networks, Ivan Oseledets, Skolkovo Institute of Science and Technology

The fast multipole method

Singular value decomposition

Transfer learning

Transfer Learning Tutorial

Transfer Learning Using Pretrained ConvNets

The State of Transfer Learning in NLP

An Introduction to Transfer Learning in Machine Learning

Transfer Learning Introduction

Transfer learning for deep learning

A Gentle Introduction to Transfer Learning for Deep Learning

Transfer Learning

Transfer Learning with Keras and Deep Learning

Autograd mechanics Excluding subgraphs from backward

Transfer Learning: how to quickly train a neural network on your own data

Implementing transfer learning with the Keras library (2018)

Python: metaprogramming in production. Part one

Python: metaprogramming in production. Part two

https://www.youtube.com/watch?v=Ui1KbmutX0k

Transfer Learning

Attention based networks

attention-model

Attention mechanism Implementation for Keras

Activation Maps (Layers Outputs) and Gradients in Keras

How to Develop an Encoder-Decoder Model with Attention for Sequence-to-Sequence Prediction in Keras

attention-mechanism

attention

Text classifier for Hierarchical Attention Networks for Document Classification

SplineCNN: Fast Geometric Deep Learning with Continuous B-Spline Kernels

Geometric Deep Learning Extension Library for PyTorch https://pytorch-geometric.readthedocs

PyTorch Geometric Documentation

LeNet-5 in 9 lines of code using Keras

Tutorial: image style transfer with TensorFlow (2019)

How to convert a model from TensorFlow to PyTorch (2019)

TensorFlow is an end-to-end open source platform for machine learning

Google AI introduced the TensorNetwork library for efficient computations in quantum systems

Deep_Learning_AI GitHub

Google announced the release of TensorFlow 2.0

Tensorpack: a fast interface for training neural networks on TensorFlow

TensorFlow Model Optimization Toolkit: float16 quantization halves model size
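
The halving follows from storage width alone: a 32-bit float takes four bytes and a 16-bit float takes two. A minimal sketch using only Python's standard `struct` module; the weight values are made up for illustration, and this is not the Toolkit's own API:

```python
import struct

# Hypothetical weights, chosen only for illustration.
weights = [0.5, -1.25, 3.0, 0.1]

# Pack the same values as 32-bit floats ('f') and as 16-bit floats ('e').
as_float32 = struct.pack(f"<{len(weights)}f", *weights)
as_float16 = struct.pack(f"<{len(weights)}e", *weights)

# Ratio of the float16 byte count to the float32 byte count.
size_ratio = len(as_float16) / len(as_float32)
print(len(as_float32), len(as_float16), size_ratio)

# float16 keeps fewer significant bits, so values that are not exactly
# representable (such as 0.1) come back slightly changed.
restored = struct.unpack(f"<{len(weights)}e", as_float16)
print(restored)
```

The trade-off is precision: the size drops by half, but each weight is rounded to the nearest value float16 can represent.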

Machine Learning, Sergey Nikolenko

Sequence To Sequence Attention Models In DyNet

attention_networks.pdf

Effective Approaches to Attention-based Neural Machine Translation (PDF)

A Brief Overview of Attention Mechanism

What is exactly the attention mechanism introduced to RNN (recurrent neural network)?

A Beginner’s Guide to Attention Mechanisms and Memory Networks

Attention and Memory in Deep Learning and NLP

Attention mechanisms in neural networks – MachineLearning.ru

Tensorflow implementation of attention mechanism for text classification tasks

How to Use Word Embedding Layers for Deep Learning with Keras

Neural machine translation with GPUs. Introductory course. Part 1

Neural machine translation with GPUs. Introductory course. Part 2

Neural machine translation with GPUs. Introductory course. Part 3

Install TensorFlow

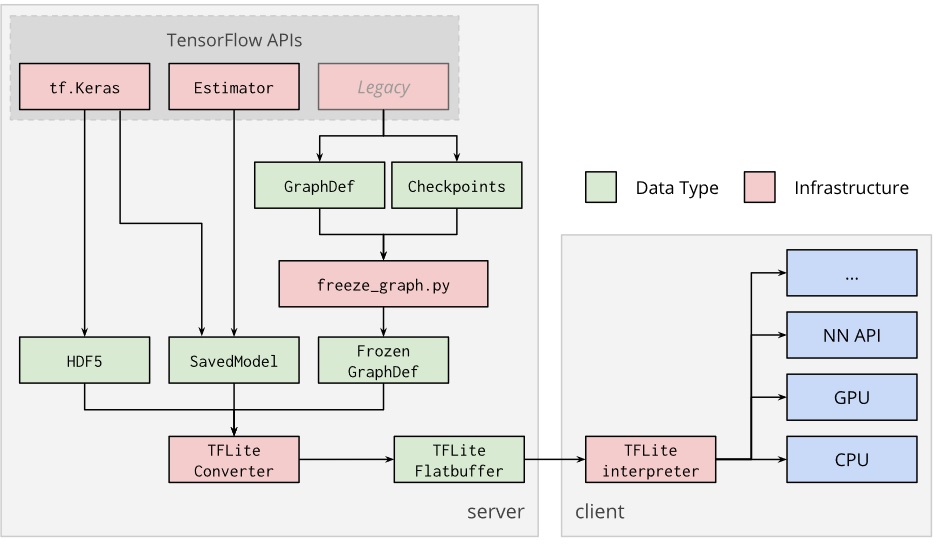

TensorFlow Lite is for mobile and embedded devices

Introduction to TensorFlow Lite

TensorFlow For Poets

TensorFlow for Poets 2: TFLite Android

TensorFlow for Poets 2: TFLite iOS

TensorFlow Lite guide

TensorFlow 2.0 Beta

Convert Your Existing Code to TensorFlow 2.0

Upgrade code to TensorFlow 2.0

TensorFlow 2.0 Beta is available

The Keras Functional API in TensorFlow

Get Started with TensorFlow

LSTM using TensorFlow 2 with embeddings

Effective TensorFlow 2.0

Standardizing on Keras: Guidance on High-level APIs in TensorFlow 2.0

Tensorflow 2.0 Keras is training 4x slower than 2.0 Estimator

TensorFlow Graphics: Differentiable Graphics Layers for TensorFlow

Introducing TensorFlow Graphics: Computer Graphics Meets Deep Learning

MIT's AutoML neural network trains 14 times faster than the state of the art

A neural network generates a 3D model from a sketch of an object

AutoML: The Next Wave of Machine Learning

AutoML and AutoDL : Simplified

AutoML

EfficientNet: how to scale a neural network using AutoML

Automated machine learning

Tutorial on using AutoML in H2O.ai to automate model hyperparameter selection

AutoML: Automatic Machine Learning

BigBiGAN: a new state-of-the-art approach to representation learning

Generative adversarial network (GAN): a beginner's guide

osnovy-data-science: basic course

Gradient boosting: the complex made simple

DeepMind published a library for RL experiments

PyTorch's popularity grew by an average of 243% over the year

Training Inception-v3 to recognize your own images

Image recognition with a pretrained Inception-v3 model using the Python API on a CPU

Google published the final version of TensorFlow 2.0

CORnet-S: a neural network models how the brain works during object recognition

Overview of Python data visualization packages

Implementing transfer learning with the Keras library

A convolutional neural network in PyTorch: a step-by-step guide

PyTorch tutorial: from installation to a working neural network

Which GPU(s) to Get for Deep Learning: My Experience and Advice for Using GPUs in Deep Learning

How to choose a GPU for deep learning

Nvidia-RTX-2070-Super-vs-Nvidia-RTX-2070

Nvidia-RTX-2070-Super-vs-Nvidia-RTX-2080-Super

Nvidia-RTX-2070-Super-vs-Nvidia-RTX-2080

Compare/Nvidia-RTX-2080-Ti-vs-Nvidia-RTX-2060-6GB

Compare Nvidia-GTX-1070-Ti-vs-Nvidia-GTX-1080-Ti

Compare Nvidia-GTX-1060-6GB-vs-Nvidia-RTX-2060-6GB

Compare Nvidia-RTX-2080-vs-Nvidia-RTX-2060-6GB

Compare Nvidia-RTX-2080-vs-Nvidia-RTX-2070

Compare Nvidia-RTX-2070-vs-Nvidia-RTX-2060-6GB

Compare AMD-Radeon-VII-vs-Nvidia-RTX-2070

Compare AMD-Radeon-VII-vs-Nvidia-RTX-2060-6GB

Compare AMD-Radeon-VII-vs-Nvidia-RTX-2080

Zotac ZOTAC GAMING GeForce RTX 2060 6GB 6.0 GB High End graphics card

Gigabyte GeForce RTX 2070 Gaming OC 8G 8.0 GB OC Enthusiast graphics card

ZOTAC GeForce RTX 2080 Blower 8.0 GB Enthusiast graphics card

Introducing Swift For TensorFlow

Swift for TensorFlow Project Home Page https://www.tensorflow.org/swift

Models and examples built with TensorFlow

TensorFlow Datasets

Datasets

A collection of datasets ready to use with TensorFlow

tensorflow

tensorflow An Open Source Machine Learning Framework for Everyone https://tensorflow.org

Learn and apply machine learning methods

Train your first neural network: a simple classifier

Use a TensorFlow Lite model for inference with ML Kit on Android

ML Kit for Firebase

Add Firebase to Your Android Project

Probabilistic Programming in Python: Bayesian Modeling and Probabilistic Machine Learning with Theano https://docs.pymc.io/

Probabilistic Programming in Python

Finding Bayesian Legos-Part 1

Finding Bayesian Legos — Part 2

How to use the Normal constructor correctly in pymc3? The problem is solved by removing theano 1.0.3 from Anaconda and installing the latest version (>=1.0.4) with pip.

Tutorial Notebooks PyMC3

Example Notebooks

A GAN synthesizes video of a person from a single image of them

A neural network trained to detect lung cancer from tomography scans

A neural network proved more accurate than 101 radiologists at diagnosing breast cancer

FUNIT: NVIDIA's neural network for image-to-image translation

Oxford researchers trained a self-supervised method for segmenting objects in video

Mesh R-CNN: a neural network that models the 3D shape of objects

The 3D-BoNet neural network segments objects in 3D images

Reasoning-RCNN: a neural network recognizes objects from thousands of categories

GitHub MR analysis

MRI analysis using PyTorch and MedicalTorch

Python code explaining how to display structural and functional fMRI data. https://medium.com/coinmonks/visualizing-brain-imaging-data-fmri-with-python-e1d0358d9dba

Intro to Analyzing Brain Imaging Data— Part I: fMRI Data Structure

List of resources and practicals used for the fMRI data analysis course of UoB (UK) (2014-2017)

Design MR Java program to load data into Hadoop. Clean data using Pig. Interact with data by Hive, and build recommender system by collaborative filtering

Coding examples from Statistical Analysis of fMRI Data by F. Gregory Ashby

A neural network generates brain MRI scans for training diagnostic algorithms

GitHub MR image analysis

Analysis of brain MRI images through computer vision techniques: segmentation and enhancement techniques.

Prostate-Cancer-Diagnosis-using-MRI

MRI_image_analysis_keras

MRI-Image-Analysis

A Numerical Magnetic Resonance Imaging (MRI) Simulation Platform https://leoliuf.github.io/MRiLab/

Quantitative Magnetic Resonance Imaging Made Easy with qMRLab: a Software for Data Simulation, Analysis and Visualization https://qmrlab.org

Magnetic resonance imaging

Small Animal Magnetic Resonance Imaging via Python.

qMRLab in a Jupyter Notebook: A tutorial for Win10 users

Quantitative MRI. Under one umbrella.

Research at NVIDIA: Medical Image Synthesis for Data Augmentation and Anonymization Using GANs

A simple way to share Jupyter Notebooks

Plotly’s Python API User Guide

Plotly User Guide in Python

Gaussian processes framework in python

GPy is a Gaussian Process (GP) framework written in Python, from the Sheffield machine learning group

Here are a few notebooks outlining basic functionality of GPy

Deep Neural Networks as Gaussian Processes (PDF)

Gaussian Process Behaviour in Wide Deep Neural Networks (PDF)

Bayesian Deep Convolutional Networks with Many Channels Are Gaussian Processes

Deep Neural Networks as Gaussian Processes

Gaussian Process Behaviour in Wide Deep Neural Networks

A Hierarchical Latent Vector Model for Learning Long-Term Structure in Music

Magenta: Music and Art Generation with Machine Intelligence

Generating Music and Lyrics using Deep Learning via Long Short-Term Recurrent Networks (LSTMs). Implements a Char-RNN in Python using TensorFlow.

Using Long Short-Term Memory neural networks to generate music

Music generation from midi files based on Long Short Term Memory

Using Long Short Term Memory Keras model with additional libraries to generate Metallica style music

StructLSTM – Structure augmented Long-Short Term Memory Networks for Music Generation

In-depth Summary of Facebook AI’s Music Translation Model

happier: hierarchical polyphonic music generative rnn – OpenReview

Model for Learning Long-Term Structure in Music

Learning a Latent Space of Multitrack Measures – Machine Learning

MusicVAE: Creating a palette for musical scores with machine learning

Awesome-Deep-Learning-Resources

awesome-deeplearning-resources

pytorch.org

Comparison of deep learning software

Benchmarking CNTK on Keras: is it Better at Deep Learning than TensorFlow?

Microsoft Cognitive Toolkit (CNTK), an open source deep-learning toolkit

Get Started with TensorFlow

Setup CNTK on your machine

Using CNTK with Keras (Beta)

Installing CNTK for Python on Windows

Microsoft Cognitive Toolkit (CNTK), an open source deep-learning toolkit

CNTK Examples

A Microsoft CNTK tutorial in Python – build a neural network

Microsoft Cognitive Toolkit (CNTK) Resources

Model Gallery

Notebooks to on-board with CNTK

Why CNTK?

Machine learning in medicine, using Microsoft's CNTK as an example

Microsoft Cognitive Toolkit (CNTK): a getting-started guide to the library

CNTK: a neural network toolkit from Microsoft Research

Python API for CNTK Examples

Python API for CNTK (2.6)

Artificial intelligence and neural networks for .NET developers

Azure Machine Learning: developing machine learning services and using them in a mobile app

NVIDIA Deep Learning

PyTorch: your new deep learning framework

Deep Learning with Torch: the 60-minute blitz

Keras: The Python Deep Learning library

Keras backends

Configuring GPU Accelerated Keras in Windows 10

Doc theano

Keras Available models Models for image classification with weights trained on ImageNet:

HDF5 for Python

How to use HDF5 files in Python (in Russian)

How to use HDF5 files in Python HDF5 allows you to store large amounts of data efficiently

HDF5 for Python

How to Use The Pre-Trained VGG Model to Classify Objects in Photographs

Practical Deep Learning for Coders

Keras Tutorial : Using pre-trained Imagenet models

Keras: The Python Deep Learning library

Python API for CNTK (2.6)

Rules of Machine Learning: Best Practices for ML Engineering

Deep Learning: recognizing scenes and landmarks in images

Top 10 machine learning publications worldwide for April 2018

The fall of RNN / LSTM

Show, Attend and Tell: Neural Image Caption Generation with Visual Attention

Deep Residual Learning for Image Recognition

A new approach in deep learning: population-based training of neural networks

10 machine learning recipes from Google developers

A look at the main trends in machine learning

Open Images Dataset V4 + Extensions

How to implement a YOLO (v3) object detector from scratch in PyTorch: Part 1

Keras and Convolutional Neural Networks (CNNs)

ROCmSoftwarePlatform/pytorch

Radeon ROCm 2.0 Officially Out With OpenCL 2.0 Support, TensorFlow 1.12, Vega 48-bit Virtual Addressing

ROCm Platform Installation Guide for Linux Current ROCm Version: 2.0

ROCmSoftwarePlatform MIOpen AMD’s Machine Intelligence Library

MIOpen: AMD’s library for high-performance machine learning primitives. MIOpen supports two programming models: OpenCL and HIP

BLAS implementation for the ROCm platform (BLAS = Basic Linear Algebra Subprograms; ROCm = Radeon Open Compute platform)

Exploring AMD Vega for Deep Learning

TVM is an open deep learning compiler stack for CPUs, GPUs, and specialized accelerators

hipCaffe: the HIP port of Caffe

ROCm Software Platform

SOPHON Artificial Intelligence Server SA3

Tensor Processing Unit

Edge TPU BM1880

BM1880 EDB Software

Features of the 96Boards AI Sophon Edge development board with the Bitmain BM1880 ASIC SoC

Compare Nvidia-RTX-2080-Ti-vs-Nvidia-RTX-2070

Compare Nvidia-GTX-1070-Ti-vs-Nvidia-Titan-RTX

Compare Nvidia-RTX-2080-Ti-vs-AMD-RX-Vega-64

Compare Nvidia-RTX-2080-Ti-vs-Nvidia-GTX-1070-Ti

Compare Nvidia-GTX-1080-Ti-vs-AMD-RX-Vega-64

Compare Nvidia-GTX-1080-Ti-vs-Nvidia-GTX-1070-Ti

Compare Nvidia-GTX-1080-vs-AMD-RX-Vega-64

Compare AMD-RX-Vega-56-vs-AMD-RX-Vega-64

Compare Nvidia-GTX-1070-Ti-vs-AMD-RX-Vega-64

Compare Nvidia-GTX-1070-Ti-vs-AMD-RX-Vega-56

Compare Nvidia-GTX-1070-Ti-vs-Nvidia-GTX-1080-Ti

Compare Nvidia-GTX-1070-Ti-vs-Nvidia-GTX-1080

Compare Nvidia-GTX-1070-vs-Nvidia-GTX-1080

Compare Nvidia-GTX-1070-vs-Nvidia-GTX-1070-Ti

Compare Nvidia-GTX-1070-vs-AMD-RX-Vega-56

gpu.userbenchmark.com

Which GPU(s) to Get for Deep Learning: My Experience and Advice for Using GPUs in Deep Learning

Benchmark CIFAR10 on TensorFlow with ROCm on AMD GPUs vs CUDA9 and cuDNN7 on NVIDIA GPUs

CIFAR10

Normalized Raw Performance Data of GPUs and TPU. Higher is better. An RTX 2080 Ti is about twice as fast as a GTX 1080 Ti: 0.77 vs 0.4.
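
The "about twice as fast" reading can be checked directly from the two normalized scores quoted above. A small sketch that uses only those two numbers (the variable names are illustrative):

```python
# Normalized scores quoted in the line above (higher is better).
rtx_2080_ti = 0.77
gtx_1080_ti = 0.4

# Relative speed of the RTX 2080 Ti compared with the GTX 1080 Ti.
speedup = rtx_2080_ti / gtx_1080_ti
print(f"RTX 2080 Ti is roughly {speedup:.1f}x the GTX 1080 Ti")
```

The ratio comes out a little under 2, which is consistent with "about twice as fast".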

Benchmarks: Deep Learning Nvidia P100 vs. V100 GPU

Normalized performance/cost numbers for convolutional networks (CNN), recurrent networks (RNN) and Transformers. Higher is better. An RTX 2070 is more than 5 times more cost-efficient than a Tesla V100.

Exploring AMD Vega for Deep Learning

Building a 50 Teraflops AMD Vega Deep Learning Box for Under $3K

Easy benchmarking of all publicly accessible implementations of convnets

Deep Learning Benchmark for comparing the performance of DL frameworks, GPUs, and single vs half precision

Deep Learning Benchmark Results (RTX 2080 TI vs. RTX 2070)

Facebook open-sourced the Detectron platform for object recognition in photos

Pattern recognition for programmers

Grid LSTM

tensorflow-grid-lstm

lstm-neural-networks

Grid LSTM – UvA Deep Learning Course

Tensorflow Grid LSTM RNN

Examples of using GridLSTM (and GridRNN in general) in tensorflow

MusicVAE: A Hierarchical Latent Vector Model for Learning Long-Term Structure in Music.

Modeling Time-Frequency Patterns with LSTM vs

Torch7 implementation of Grid-LSTM as described here: http://arxiv.org/pdf/1507.01526v2.pdf

Grid Long Short-Term Memory

Neural Network Right Here in Your Browser

Recurrent Neural Network – A curated list of resources dedicated to RNN

Generation of poems with a recurrent neural network

Generating Poetry using Neural Networks

Chinese Poetry Generation with Recurrent Neural Networks

GhostWriter: Using an LSTM for Automatic Rap Lyric Generation. Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, pages 1919–1924, Lisbon, Portugal, 17-21 September 2015.

Generating Sentences from a Continuous Space

PoetRNN A python framework for learning and producing verse poetry

How to teach your neural network to generate poetry

ClassicAI of the genre: ML finds its way into poetry

github.com/facebookresearch

Deep learning V: recurrent networks

A neural network composed lyrics in the style of Nirvana

Comparison of deep learning software

Neural Network Right Here in Your Browser

TensorFlow on AWS: convenient capabilities for deep learning in the cloud. 85% of cloud-based TensorFlow projects run on AWS.

Deep Learning on ROCm

GitHub Facebook Research

nevergrad A Python toolbox for performing gradient-free optimization

On word embeddings – Part 1

On word embeddings – Part 2: Approximating the Softmax

On word embeddings – Part 3: The secret ingredients of word2vec

A survey of cross-lingual word embedding models

Word embeddings in 2017: Trends and future directions

Keras: The Python Deep Learning library

Deep Learning for humans http://keras.io/

Keras examples directory

Stihi.ru (Стихи.ру): a Russian literary portal

github IlyaGusev

A library for analyzing and generating poetry in Russian

A morphological analyzer based on neural networks and pymorphy2

NLP course assignment

Poetic corpus of the Russian language http://poetry-corpus.ru/

Code inspired by Unsupervised Machine Translation Using Monolingual Corpora Only

Open Source Neural Machine Translation in PyTorch http://opennmt.net/

A library for Multilingual Unsupervised or Supervised word Embeddings

PyText A natural language modeling framework based on PyTorch https://fb.me/pytextdocs

RusVectōrēs: semantic models for the Russian language

Sberbank AI

ClassicAI: a poetry artificial intelligence competition

The MyStem program performs morphological analysis of Russian text. It can build hypothetical parses for words that are not in its dictionary.

A Python wrapper of the Yandex Mystem 3.1 morphological analyzer (http://api.yandex.ru/mystem)

gensim

NLTK Natural Language Toolkit

nltk windows

rusvectores

Russian National Corpus

Flask

Morphological tagging using a broad-coverage language description

A receiver operating characteristic curve, i.e., ROC curve

Neural Style Transfer: Creating Art with Deep Learning using tf.keras and eager execution

TF Jam — Shooting Hoops with Machine Learning

Introducing TensorFlow.js: Machine Learning in Javascript

Standardizing on Keras: Guidance on High-level APIs in TensorFlow 2.0

PyText Documentation

Language modeling a billion words

Deep Learning with Torch: the 60-minute blitz

NNGraph A graph based container for creating deep learning models

Tutorials for learning Torch

Building TensorFlow on Android

Building TensorFlow on iOS

How to use TensorFlow Mobile in Android apps

TensorFlow Lite is for mobile and embedded devices

TensorFlow Lite versus TensorFlow Mobile

tensorflow/tensorflow/contrib/lite/

github tensorflow

Android TensorFlow Lite Machine Learning Example

After a TensorFlow model is trained, the TensorFlow Lite converter uses that model to generate a TensorFlow Lite FlatBuffer file (.tflite). The converter supports as input: SavedModels, frozen graphs (models generated by freeze_graph.py), and tf.keras HDF5 models. The TensorFlow Lite FlatBuffer file is deployed to a client device (generally a mobile or embedded device), and the TensorFlow Lite interpreter uses the compressed model for on-device inference.
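
The workflow described above can be sketched roughly as follows, assuming TensorFlow 2.x is installed. The function names and the paths passed to them are hypothetical; only the `tf.lite.TFLiteConverter` and `tf.lite.Interpreter` calls come from TensorFlow itself:

```python
def convert_saved_model(saved_model_dir, tflite_path):
    """Convert a SavedModel directory into a .tflite FlatBuffer file."""
    import tensorflow as tf  # imported lazily; assumes TensorFlow 2.x

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    tflite_model = converter.convert()  # serialized FlatBuffer as bytes
    with open(tflite_path, "wb") as f:
        f.write(tflite_model)
    return tflite_path


def load_interpreter(tflite_path):
    """Load a .tflite file into the TensorFlow Lite interpreter."""
    import tensorflow as tf  # imported lazily; assumes TensorFlow 2.x

    interpreter = tf.lite.Interpreter(model_path=tflite_path)
    interpreter.allocate_tensors()  # must be called before running inference
    return interpreter
```

On a phone or embedded board the second step would normally use the platform's TensorFlow Lite runtime rather than the full Python package; the Python interpreter is handy for checking the converted file on a desktop first.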

TF Lite Developer Guide

TensorFlow for Poets 2: TFLite iOS

TensorFlow for Poets 2: TFLite Android

TensorFlow for Poets 2: TFMobile

Tinker With a Neural Network Right Here in Your Browser

TensorFlow Lite Optimizing Converter command-line examples

Differences between L1 and L2 as Loss Function and Regularization

L1 and L2 Regularization

Regularization

L1 and L2 regularization in machine learning

L1 regularization of linear regression. Least angle regression (the LARS algorithm)

L1 and L2 regularization for linear regression

L1 and L2 regularization for logistic regression
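
For orientation, the two penalties these links compare differ only in how they measure the weights: L1 sums absolute values, L2 sums squares. A minimal sketch in plain Python; the weights and the strength `lam` are made-up illustrative values, not taken from any of the linked articles:

```python
def l1_penalty(weights, lam):
    """L1 (lasso-style) penalty: lam times the sum of absolute weights."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """L2 (ridge-style) penalty: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)


# Hypothetical weights and regularization strength, for illustration only.
weights = [0.5, -2.0, 0.0, 1.5]
lam = 0.1

l1 = l1_penalty(weights, lam)
l2 = l2_penalty(weights, lam)
print(l1, l2)
```

Either term is added to the data loss during training. Squaring makes L2 punish large weights disproportionately, while L1 tends to push small weights all the way to zero.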

Natural language processing in Python

Practical deep learning in Theano and TensorFlow

TensorFlow For Poets

Get Started with TensorFlow

Train your own image classifier with Inception in TensorFlow

Google Developers

awesome-deep-learning-papers

awesome-deep-learning2

Deep Learning An MIT Press book

deep learning coursera

Artificial Intelligence: A Modern Approach

Docker container for NeuralTalk

How and why to use Docker

Introduction to Docker from scratch: your first microservice

LSTM: long short-term memory networks

The Khronos Group

Khronos royalty-free open standards for 3D graphics, Virtual and Augmented Reality, Parallel Computing, Neural Networks, and Vision Processing

AMD Ryzen 3000 CPU specifications: the flagship Ryzen 9 3850X will offer 16 cores and a 5.1 GHz clock speed

NeuralTuringMachine

Oxford Deep NLP 2017 course

A Stable Neural-Turing-Machine (NTM) Implementation (Source Code and Pre-Print)

A series of models applying memory augmented neural networks to machine translation

Newly published papers (< 6 months) which are worth reading

- MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications (2017), Andrew G. Howard et al. [pdf]

- Convolutional Sequence to Sequence Learning (2017), Jonas Gehring et al. [pdf]

- A Knowledge-Grounded Neural Conversation Model (2017), Marjan Ghazvininejad et al. [pdf]

- Accurate, Large Minibatch SGD:Training ImageNet in 1 Hour (2017), Priya Goyal et al. [pdf]

- TACOTRON: Towards end-to-end speech synthesis (2017), Y. Wang et al. [pdf]

- Deep Photo Style Transfer (2017), F. Luan et al. [pdf]

- Evolution Strategies as a Scalable Alternative to Reinforcement Learning (2017), T. Salimans et al. [pdf]

- Deformable Convolutional Networks (2017), J. Dai et al. [pdf]

- Mask R-CNN (2017), K. He et al. [pdf]

- Learning to discover cross-domain relations with generative adversarial networks (2017), T. Kim et al. [pdf]

- Deep voice: Real-time neural text-to-speech (2017), S. Arik et al., [pdf]

- PixelNet: Representation of the pixels, by the pixels, and for the pixels (2017), A. Bansal et al. [pdf]

- Batch renormalization: Towards reducing minibatch dependence in batch-normalized models (2017), S. Ioffe. [pdf]

- Wasserstein GAN (2017), M. Arjovsky et al. [pdf]

- Understanding deep learning requires rethinking generalization (2017), C. Zhang et al. [pdf]

- Least squares generative adversarial networks (2016), X. Mao et al. [pdf]

- Old Papers : Before 2012

- HW / SW / Dataset : Technical reports

- Book / Survey / Review

- Video Lectures / Tutorials / Blogs

- Appendix: More than Top 100 : More papers not in the list

Understanding / Generalization / Transfer

- Distilling the knowledge in a neural network (2015), G. Hinton et al. [pdf]

- Deep neural networks are easily fooled: High confidence predictions for unrecognizable images (2015), A. Nguyen et al. [pdf]

- How transferable are features in deep neural networks? (2014), J. Yosinski et al. [pdf]

- CNN features off-the-Shelf: An astounding baseline for recognition (2014), A. Razavian et al. [pdf]

- Learning and transferring mid-Level image representations using convolutional neural networks (2014), M. Oquab et al. [pdf]

- Visualizing and understanding convolutional networks (2014), M. Zeiler and R. Fergus [pdf]

- Decaf: A deep convolutional activation feature for generic visual recognition (2014), J. Donahue et al. [pdf]

Optimization / Training Techniques

- Training very deep networks (2015), R. Srivastava et al. [pdf]

- Batch normalization: Accelerating deep network training by reducing internal covariate shift (2015), S. Ioffe and C. Szegedy [pdf]

- Delving deep into rectifiers: Surpassing human-level performance on imagenet classification (2015), K. He et al. [pdf]

- Dropout: A simple way to prevent neural networks from overfitting (2014), N. Srivastava et al. [pdf]

- Adam: A method for stochastic optimization (2014), D. Kingma and J. Ba [pdf]

- Improving neural networks by preventing co-adaptation of feature detectors (2012), G. Hinton et al. [pdf]

- Random search for hyper-parameter optimization (2012) J. Bergstra and Y. Bengio [pdf]

Unsupervised / Generative Models

- Pixel recurrent neural networks (2016), A. Oord et al. [pdf]

- Improved techniques for training GANs (2016), T. Salimans et al. [pdf]

- Unsupervised representation learning with deep convolutional generative adversarial networks (2015), A. Radford et al. [pdf]

- DRAW: A recurrent neural network for image generation (2015), K. Gregor et al. [pdf]

- Generative adversarial nets (2014), I. Goodfellow et al. [pdf]

- Auto-encoding variational Bayes (2013), D. Kingma and M. Welling [pdf]

- Building high-level features using large scale unsupervised learning (2013), Q. Le et al. [pdf]

Convolutional Neural Network Models

- Rethinking the inception architecture for computer vision (2016), C. Szegedy et al. [pdf]

- Inception-v4, inception-resnet and the impact of residual connections on learning (2016), C. Szegedy et al. [pdf]

- Identity Mappings in Deep Residual Networks (2016), K. He et al. [pdf]

- Deep residual learning for image recognition (2016), K. He et al. [pdf]

- Spatial transformer networks (2015), M. Jaderberg et al. [pdf]

- Going deeper with convolutions (2015), C. Szegedy et al. [pdf]

- Very deep convolutional networks for large-scale image recognition (2014), K. Simonyan and A. Zisserman [pdf]

- Return of the devil in the details: delving deep into convolutional nets (2014), K. Chatfield et al. [pdf]

- OverFeat: Integrated recognition, localization and detection using convolutional networks (2013), P. Sermanet et al. [pdf]

- Maxout networks (2013), I. Goodfellow et al. [pdf]

- Network in network (2013), M. Lin et al. [pdf]

- ImageNet classification with deep convolutional neural networks (2012), A. Krizhevsky et al. [pdf]

Image: Segmentation / Object Detection

- You only look once: Unified, real-time object detection (2016), J. Redmon et al. [pdf]

- Fully convolutional networks for semantic segmentation (2015), J. Long et al. [pdf]

- Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks (2015), S. Ren et al. [pdf]

- Fast R-CNN (2015), R. Girshick [pdf]

- Rich feature hierarchies for accurate object detection and semantic segmentation (2014), R. Girshick et al. [pdf]

- Spatial pyramid pooling in deep convolutional networks for visual recognition (2014), K. He et al. [pdf]

- Semantic image segmentation with deep convolutional nets and fully connected CRFs, L. Chen et al. [pdf]

- Learning hierarchical features for scene labeling (2013), C. Farabet et al. [pdf]

Image / Video / Etc

- Image Super-Resolution Using Deep Convolutional Networks (2016), C. Dong et al. [pdf]

- A neural algorithm of artistic style (2015), L. Gatys et al. [pdf]

- Deep visual-semantic alignments for generating image descriptions (2015), A. Karpathy and L. Fei-Fei [pdf]

- Show, attend and tell: Neural image caption generation with visual attention (2015), K. Xu et al. [pdf]

- Show and tell: A neural image caption generator (2015), O. Vinyals et al. [pdf]

- Long-term recurrent convolutional networks for visual recognition and description (2015), J. Donahue et al. [pdf]

- VQA: Visual question answering (2015), S. Antol et al. [pdf]

- DeepFace: Closing the gap to human-level performance in face verification (2014), Y. Taigman et al. [pdf]

- Large-scale video classification with convolutional neural networks (2014), A. Karpathy et al. [pdf]

- Two-stream convolutional networks for action recognition in videos (2014), K. Simonyan et al. [pdf]

- 3D convolutional neural networks for human action recognition (2013), S. Ji et al. [pdf]

Natural Language Processing / RNNs

- Neural Architectures for Named Entity Recognition (2016), G. Lample et al. [pdf]

- Exploring the limits of language modeling (2016), R. Jozefowicz et al. [pdf]

- Teaching machines to read and comprehend (2015), K. Hermann et al. [pdf]

- Effective approaches to attention-based neural machine translation (2015), M. Luong et al. [pdf]

- Conditional random fields as recurrent neural networks (2015), S. Zheng and S. Jayasumana. [pdf]

- Memory networks (2014), J. Weston et al. [pdf]

- Neural Turing machines (2014), A. Graves et al. [pdf]

- Neural machine translation by jointly learning to align and translate (2014), D. Bahdanau et al. [pdf]

- Sequence to sequence learning with neural networks (2014), I. Sutskever et al. [pdf]

- Learning phrase representations using RNN encoder-decoder for statistical machine translation (2014), K. Cho et al. [pdf]

- A convolutional neural network for modeling sentences (2014), N. Kalchbrenner et al. [pdf]

- Convolutional neural networks for sentence classification (2014), Y. Kim [pdf]

- GloVe: Global vectors for word representation (2014), J. Pennington et al. [pdf]

- Distributed representations of sentences and documents (2014), Q. Le and T. Mikolov [pdf]

- Distributed representations of words and phrases and their compositionality (2013), T. Mikolov et al. [pdf]

- Efficient estimation of word representations in vector space (2013), T. Mikolov et al. [pdf]

- Recursive deep models for semantic compositionality over a sentiment treebank (2013), R. Socher et al. [pdf]

- Generating sequences with recurrent neural networks (2013), A. Graves. [pdf]

Speech / Other Domain

- End-to-end attention-based large vocabulary speech recognition (2016), D. Bahdanau et al. [pdf]

- Deep speech 2: End-to-end speech recognition in English and Mandarin (2015), D. Amodei et al. [pdf]

- Speech recognition with deep recurrent neural networks (2013), A. Graves [pdf]

- Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups (2012), G. Hinton et al. [pdf]

- Context-dependent pre-trained deep neural networks for large-vocabulary speech recognition (2012), G. Dahl et al. [pdf]

- Acoustic modeling using deep belief networks (2012), A. Mohamed et al. [pdf]

Reinforcement Learning / Robotics

- End-to-end training of deep visuomotor policies (2016), S. Levine et al. [pdf]

- Learning Hand-Eye Coordination for Robotic Grasping with Deep Learning and Large-Scale Data Collection (2016), S. Levine et al. [pdf]

- Asynchronous methods for deep reinforcement learning (2016), V. Mnih et al. [pdf]

- Deep Reinforcement Learning with Double Q-Learning (2016), H. Hasselt et al. [pdf]

- Mastering the game of Go with deep neural networks and tree search (2016), D. Silver et al. [pdf]

- Continuous control with deep reinforcement learning (2015), T. Lillicrap et al. [pdf]

- Human-level control through deep reinforcement learning (2015), V. Mnih et al. [pdf]

- Deep learning for detecting robotic grasps (2015), I. Lenz et al. [pdf]

- Playing Atari with deep reinforcement learning (2013), V. Mnih et al. [pdf]

More Papers from 2016

- Layer Normalization (2016), J. Ba et al. [pdf]

- Learning to learn by gradient descent by gradient descent (2016), M. Andrychowicz et al. [pdf]

- Domain-adversarial training of neural networks (2016), Y. Ganin et al. [pdf]

- WaveNet: A Generative Model for Raw Audio (2016), A. Oord et al. [pdf] [web]

- Colorful image colorization (2016), R. Zhang et al. [pdf]

- Generative visual manipulation on the natural image manifold (2016), J. Zhu et al. [pdf]

- Texture networks: Feed-forward synthesis of textures and stylized images (2016), D. Ulyanov et al. [pdf]

- SSD: Single shot multibox detector (2016), W. Liu et al. [pdf]

- SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size (2016), F. Iandola et al. [pdf]

- EIE: Efficient inference engine on compressed deep neural network (2016), S. Han et al. [pdf]

- Binarized neural networks: Training deep neural networks with weights and activations constrained to +1 or -1 (2016), M. Courbariaux et al. [pdf]

- Dynamic memory networks for visual and textual question answering (2016), C. Xiong et al. [pdf]

- Stacked attention networks for image question answering (2016), Z. Yang et al. [pdf]

- Hybrid computing using a neural network with dynamic external memory (2016), A. Graves et al. [pdf]

- Google’s neural machine translation system: Bridging the gap between human and machine translation (2016), Y. Wu et al. [pdf]

New papers

Newly published papers (< 6 months) which are worth reading

- MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications (2017), Andrew G. Howard et al. [pdf]

- Convolutional Sequence to Sequence Learning (2017), Jonas Gehring et al. [pdf]

- A Knowledge-Grounded Neural Conversation Model (2017), Marjan Ghazvininejad et al. [pdf]

- Accurate, Large Minibatch SGD: Training ImageNet in 1 Hour (2017), Priya Goyal et al. [pdf]

- TACOTRON: Towards end-to-end speech synthesis (2017), Y. Wang et al. [pdf]

- Deep Photo Style Transfer (2017), F. Luan et al. [pdf]

- Evolution Strategies as a Scalable Alternative to Reinforcement Learning (2017), T. Salimans et al. [pdf]

- Deformable Convolutional Networks (2017), J. Dai et al. [pdf]

- Mask R-CNN (2017), K. He et al. [pdf]

- Learning to discover cross-domain relations with generative adversarial networks (2017), T. Kim et al. [pdf]

- Deep voice: Real-time neural text-to-speech (2017), S. Arik et al. [pdf]

- PixelNet: Representation of the pixels, by the pixels, and for the pixels (2017), A. Bansal et al. [pdf]

- Batch renormalization: Towards reducing minibatch dependence in batch-normalized models (2017), S. Ioffe. [pdf]

- Wasserstein GAN (2017), M. Arjovsky et al. [pdf]

- Understanding deep learning requires rethinking generalization (2017), C. Zhang et al. [pdf]

- Least squares generative adversarial networks (2016), X. Mao et al. [pdf]

Old Papers

Classic papers published before 2012

- An analysis of single-layer networks in unsupervised feature learning (2011), A. Coates et al. [pdf]

- Deep sparse rectifier neural networks (2011), X. Glorot et al. [pdf]

- Natural language processing (almost) from scratch (2011), R. Collobert et al. [pdf]

- Recurrent neural network based language model (2010), T. Mikolov et al. [pdf]

- Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion (2010), P. Vincent et al. [pdf]

- Learning mid-level features for recognition (2010), Y. Boureau [pdf]

- A practical guide to training restricted boltzmann machines (2010), G. Hinton [pdf]

- Understanding the difficulty of training deep feedforward neural networks (2010), X. Glorot and Y. Bengio [pdf]

- Why does unsupervised pre-training help deep learning (2010), D. Erhan et al. [pdf]

- Learning deep architectures for AI (2009), Y. Bengio. [pdf]

- Convolutional deep belief networks for scalable unsupervised learning of hierarchical representations (2009), H. Lee et al. [pdf]

- Greedy layer-wise training of deep networks (2007), Y. Bengio et al. [pdf]

- Reducing the dimensionality of data with neural networks (2006), G. Hinton and R. Salakhutdinov. [pdf]

- A fast learning algorithm for deep belief nets (2006), G. Hinton et al. [pdf]

- Gradient-based learning applied to document recognition (1998), Y. LeCun et al. [pdf]

- Long short-term memory (1997), S. Hochreiter and J. Schmidhuber. [pdf]

HW / SW / Dataset

- SQuAD: 100,000+ Questions for Machine Comprehension of Text (2016), Rajpurkar et al. [pdf]

- OpenAI gym (2016), G. Brockman et al. [pdf]

- TensorFlow: Large-scale machine learning on heterogeneous distributed systems (2016), M. Abadi et al. [pdf]

- Theano: A Python framework for fast computation of mathematical expressions, R. Al-Rfou et al.

- Torch7: A matlab-like environment for machine learning, R. Collobert et al. [pdf]

- MatConvNet: Convolutional neural networks for matlab (2015), A. Vedaldi and K. Lenc [pdf]

- Imagenet large scale visual recognition challenge (2015), O. Russakovsky et al. [pdf]

- Caffe: Convolutional architecture for fast feature embedding (2014), Y. Jia et al. [pdf]

Book / Survey / Review

- On the Origin of Deep Learning (2017), H. Wang and Bhiksha Raj. [pdf]

- Deep Reinforcement Learning: An Overview (2017), Y. Li [pdf]

- Neural Machine Translation and Sequence-to-sequence Models: A Tutorial (2017), G. Neubig. [pdf]

- Neural Networks and Deep Learning (Book, Jan 2017), Michael Nielsen. [html]

- Deep learning (Book, 2016), Goodfellow et al. [html]

- LSTM: A search space odyssey (2016), K. Greff et al. [pdf]

- Tutorial on Variational Autoencoders (2016), C. Doersch. [pdf]

- Deep learning (2015), Y. LeCun, Y. Bengio and G. Hinton [pdf]

- Deep learning in neural networks: An overview (2015), J. Schmidhuber [pdf]

- Representation learning: A review and new perspectives (2013), Y. Bengio et al. [pdf]

Video Lectures / Tutorials / Blogs

(Lectures)

- CS231n, Convolutional Neural Networks for Visual Recognition, Stanford University [web]

- CS224d, Deep Learning for Natural Language Processing, Stanford University [web]

- Oxford Deep NLP 2017, Deep Learning for Natural Language Processing, University of Oxford [web]

(Tutorials)

- NIPS 2016 Tutorials, Barcelona [web]

- ICML 2016 Tutorials, New York City [web]

- ICLR 2016 Videos, San Juan [web]

- Deep Learning Summer School 2016, Montreal [web]

- Bay Area Deep Learning School 2016, Stanford [web]

(Blogs)

- OpenAI [web]

- Distill [web]

- Andrej Karpathy Blog [web]

- Colah’s Blog [web]

- WildML [web]

- FastML [web]

- TheMorningPaper [web]

Appendix: More than Top 100

(2016)

- A character-level decoder without explicit segmentation for neural machine translation (2016), J. Chung et al. [pdf]

- Dermatologist-level classification of skin cancer with deep neural networks (2017), A. Esteva et al. [html]

- Weakly supervised object localization with multi-fold multiple instance learning (2017), R. Gokberk et al. [pdf]

- Brain tumor segmentation with deep neural networks (2017), M. Havaei et al. [pdf]

- Professor Forcing: A New Algorithm for Training Recurrent Networks (2016), A. Lamb et al. [pdf]

- Adversarially learned inference (2016), V. Dumoulin et al. [web][pdf]

- Understanding convolutional neural networks (2016), J. Koushik [pdf]

- Taking the human out of the loop: A review of bayesian optimization (2016), B. Shahriari et al. [pdf]

- Adaptive computation time for recurrent neural networks (2016), A. Graves [pdf]

- Densely connected convolutional networks (2016), G. Huang et al. [pdf]

- Region-based convolutional networks for accurate object detection and segmentation (2016), R. Girshick et al.

- Continuous deep q-learning with model-based acceleration (2016), S. Gu et al. [pdf]

- A thorough examination of the cnn/daily mail reading comprehension task (2016), D. Chen et al. [pdf]

- Achieving open vocabulary neural machine translation with hybrid word-character models, M. Luong and C. Manning. [pdf]

- Very Deep Convolutional Networks for Natural Language Processing (2016), A. Conneau et al. [pdf]

- Bag of tricks for efficient text classification (2016), A. Joulin et al. [pdf]

- Efficient piecewise training of deep structured models for semantic segmentation (2016), G. Lin et al. [pdf]

- Learning to compose neural networks for question answering (2016), J. Andreas et al. [pdf]

- Perceptual losses for real-time style transfer and super-resolution (2016), J. Johnson et al. [pdf]

- Reading text in the wild with convolutional neural networks (2016), M. Jaderberg et al. [pdf]

- What makes for effective detection proposals? (2016), J. Hosang et al. [pdf]

- Inside-outside net: Detecting objects in context with skip pooling and recurrent neural networks (2016), S. Bell et al. [pdf]

- Instance-aware semantic segmentation via multi-task network cascades (2016), J. Dai et al. [pdf]

- Conditional image generation with pixelcnn decoders (2016), A. van den Oord et al. [pdf]

- Deep networks with stochastic depth (2016), G. Huang et al. [pdf]

- Consistency and Fluctuations For Stochastic Gradient Langevin Dynamics (2016), Yee Whye Teh et al. [pdf]

(2015)

- Ask your neurons: A neural-based approach to answering questions about images (2015), M. Malinowski et al. [pdf]

- Exploring models and data for image question answering (2015), M. Ren et al. [pdf]

- Are you talking to a machine? dataset and methods for multilingual image question (2015), H. Gao et al. [pdf]

- Mind’s eye: A recurrent visual representation for image caption generation (2015), X. Chen and C. Zitnick. [pdf]

- From captions to visual concepts and back (2015), H. Fang et al. [pdf]

- Towards AI-complete question answering: A set of prerequisite toy tasks (2015), J. Weston et al. [pdf]

- Ask me anything: Dynamic memory networks for natural language processing (2015), A. Kumar et al. [pdf]

- Unsupervised learning of video representations using LSTMs (2015), N. Srivastava et al. [pdf]

- Deep compression: Compressing deep neural networks with pruning, trained quantization and huffman coding (2015), S. Han et al. [pdf]

- Improved semantic representations from tree-structured long short-term memory networks (2015), K. Tai et al. [pdf]

- Character-aware neural language models (2015), Y. Kim et al. [pdf]

- Grammar as a foreign language (2015), O. Vinyals et al. [pdf]

- Trust Region Policy Optimization (2015), J. Schulman et al. [pdf]

- Beyond short snippets: Deep networks for video classification (2015) [pdf]

- Learning Deconvolution Network for Semantic Segmentation (2015), H. Noh et al. [pdf]

- Learning spatiotemporal features with 3d convolutional networks (2015), D. Tran et al. [pdf]

- Understanding neural networks through deep visualization (2015), J. Yosinski et al. [pdf]

- An Empirical Exploration of Recurrent Network Architectures (2015), R. Jozefowicz et al. [pdf]

- Deep generative image models using a laplacian pyramid of adversarial networks (2015), E. Denton et al. [pdf]

- Gated Feedback Recurrent Neural Networks (2015), J. Chung et al. [pdf]

- Fast and accurate deep network learning by exponential linear units (ELUs) (2015), D. Clevert et al. [pdf]

- Pointer networks (2015), O. Vinyals et al. [pdf]

- Visualizing and Understanding Recurrent Networks (2015), A. Karpathy et al. [pdf]

- Attention-based models for speech recognition (2015), J. Chorowski et al. [pdf]

- End-to-end memory networks (2015), S. Sukhbaatar et al. [pdf]

- Describing videos by exploiting temporal structure (2015), L. Yao et al. [pdf]

- A neural conversational model (2015), O. Vinyals and Q. Le. [pdf]

- Improving distributional similarity with lessons learned from word embeddings, O. Levy et al. [pdf] (https://www.transacl.org/ojs/index.php/tacl/article/download/570/124)

- Transition-Based Dependency Parsing with Stack Long Short-Term Memory (2015), C. Dyer et al. [pdf]

- Improved Transition-Based Parsing by Modeling Characters instead of Words with LSTMs (2015), M. Ballesteros et al. [pdf]

- Finding function in form: Compositional character models for open vocabulary word representation (2015), W. Ling et al. [pdf]

(~2014)

- DeepPose: Human pose estimation via deep neural networks (2014), A. Toshev and C. Szegedy [pdf]

- Learning a Deep Convolutional Network for Image Super-Resolution (2014), C. Dong et al. [pdf]

- Recurrent models of visual attention (2014), V. Mnih et al. [pdf]

- Empirical evaluation of gated recurrent neural networks on sequence modeling (2014), J. Chung et al. [pdf]

- Addressing the rare word problem in neural machine translation (2014), M. Luong et al. [pdf]

- On the properties of neural machine translation: Encoder-decoder approaches (2014), K. Cho et al.

- Recurrent neural network regularization (2014), W. Zaremba et al. [pdf]

- Intriguing properties of neural networks (2014), C. Szegedy et al. [pdf]

- Towards end-to-end speech recognition with recurrent neural networks (2014), A. Graves and N. Jaitly. [pdf]

- Scalable object detection using deep neural networks (2014), D. Erhan et al. [pdf]

- On the importance of initialization and momentum in deep learning (2013), I. Sutskever et al. [pdf]

- Regularization of neural networks using dropconnect (2013), L. Wan et al. [pdf]

- Learning Hierarchical Features for Scene Labeling (2013), C. Farabet et al. [pdf]

- Linguistic Regularities in Continuous Space Word Representations (2013), T. Mikolov et al. [pdf]

- Large scale distributed deep networks (2012), J. Dean et al. [pdf]

- A Fast and Accurate Dependency Parser using Neural Networks, Chen and Manning. [pdf]

Runtime 3D Asset Delivery

Sketchfab

Facebook gains support for glTF 2.0 3D files and new ways to share 3D content

glTF 2.0 and OpenGEX

glTF-Sample-Models

glTF Tutorial. This tutorial gives an introduction to glTF, the GL transmission format. It summarizes the most important features and application cases of glTF, and describes the structure of the files that are related to glTF. It explains how glTF assets may be read, processed, and used to display 3D graphics efficiently.

glTF-CSharp-Loader

glTF – Runtime 3D Asset Delivery

glTF™ (GL Transmission Format) is a royalty-free specification for the efficient transmission and loading of 3D scenes and models by applications. glTF minimizes both the size of 3D assets, and the runtime processing needed to unpack and use those assets. glTF defines an extensible, common publishing format for 3D content tools and services that streamlines authoring workflows and enables interoperable use of content across the industry.
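As a rough illustration of the description above: a glTF 2.0 asset is a JSON document, so it can be inspected with nothing more than a JSON parser. The sketch below is only an assumption-laden toy, not a loader. The sample document `MINIMAL_GLTF` and the helper `summarize_gltf` are hypothetical names made up for this example; the only spec detail relied on is that glTF 2.0 requires a top-level `asset` object carrying a `version` string.

```python
import json

# Hypothetical sample asset for this sketch: the required "asset" object
# plus one scene that references one empty node.
MINIMAL_GLTF = """
{
  "asset": {"version": "2.0"},
  "scene": 0,
  "scenes": [{"nodes": [0]}],
  "nodes": [{"name": "root"}]
}
"""


def summarize_gltf(text):
    """Parse glTF JSON text and count a few of its top-level arrays."""
    doc = json.loads(text)
    # "asset.version" is required by glTF 2.0, so index it directly.
    version = doc["asset"]["version"]
    return {
        "version": version,
        # The arrays below are optional, so default to an empty list.
        "scenes": len(doc.get("scenes", [])),
        "nodes": len(doc.get("nodes", [])),
        "meshes": len(doc.get("meshes", [])),
    }


if __name__ == "__main__":
    print(summarize_gltf(MINIMAL_GLTF))
```

A real loader would also resolve buffers, accessors and binary `.glb` containers, which this sketch deliberately leaves out.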

Runtime GLTF Loader for Unity3D

glTF-Blender-IO

SYCL C++ Single-source Heterogeneous Programming for OpenCL

Khronos SYCL Registry

SyclParallelSTL Open Source Parallel STL implementation

SYCL

SYCL WiKi

TensorFlow™ AMD Setup Guide

TensorFlow 1.x On Ubuntu 16.04 LTS

AMDGPU-PRO Driver for Linux

Codeplay Announces World’s First Fully-Conformant SYCL 1.2.1 Solution (posted on August 23, 2018)

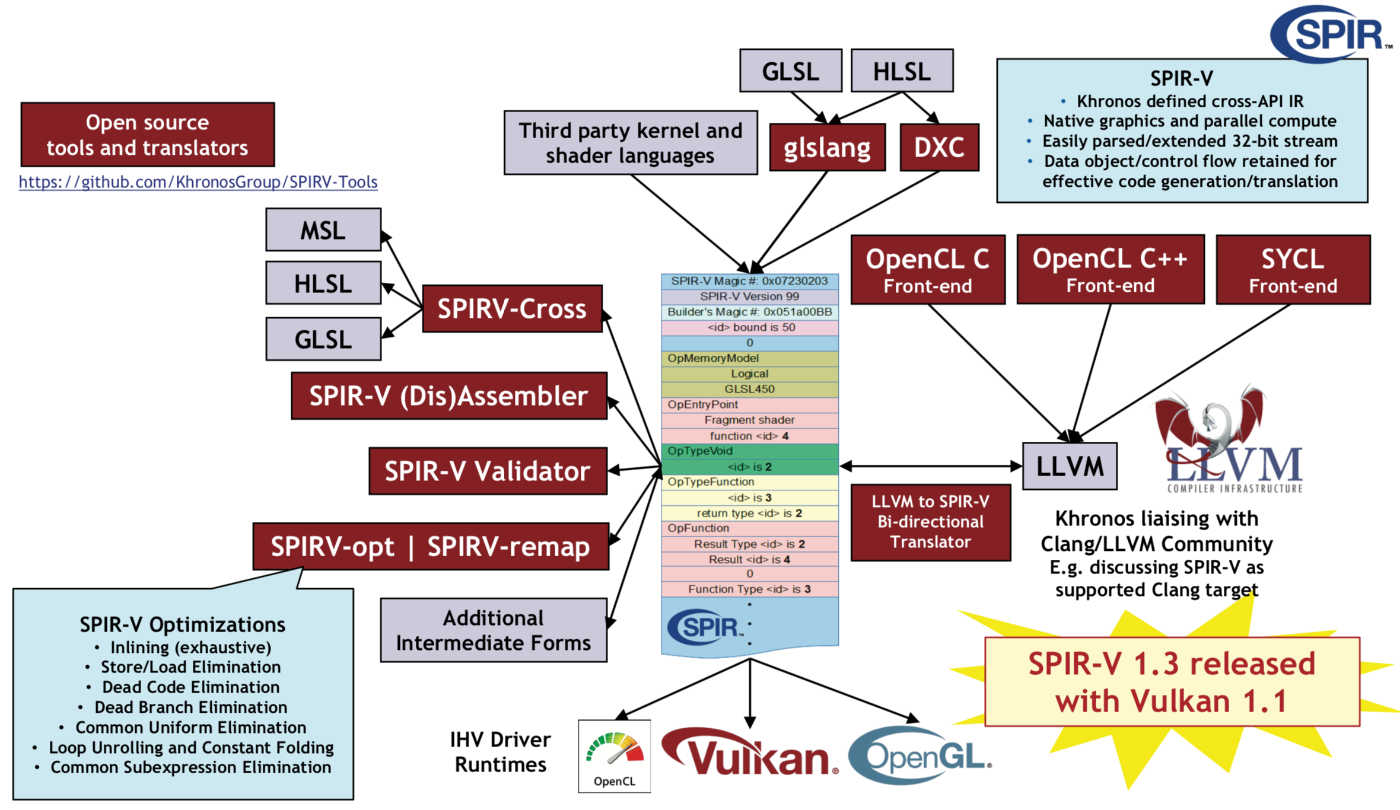

SPIR The Industry Open Standard Intermediate Language for Parallel Compute and Graphics

SPIRV-Cross is a practical tool and library for performing reflection on SPIR-V and disassembling SPIR-V back to high level languages

A short OpenGL / SPIRV example

Khronos Vulkan

Vulkan NVIDIA

Eigen is a C++ template library for linear algebra: matrices, vectors, numerical solvers, and related algorithms.

An implementation of BLAS using the SYCL open standard for acceleration on OpenCL devices

Tensorflow

ComputeCpp is Conformant with SYCL 1.2.1!

triSYCL

Xilinx FPGAs: The Chip Behind Alibaba

Optimizing the Convolution Operation to Accelerate Deep Neural Networks on FPGA

Neural Network Exchange Format

Neural Network Exchange Format (NNEF)

The NNEF Tools repository contains tools to generate and consume NNEF documents

The LLVM Compiler Infrastructure

Getting Started with the LLVM System

LLVM Download Page

Clang: a C language family frontend for LLVM

LLVM 8.0.0 Release Notes

Clang 7 documentation

Clang (pronounced "klang") is a frontend for the C, C++, Objective-C, Objective-C++ and OpenCL C programming languages, used together with the LLVM framework. Clang translates source code into LLVM intermediate representation (bitcode), after which the framework performs optimization and code generation. The project's goal is to provide a replacement for the GNU Compiler Collection (GCC). Development follows the open source model within the LLVM project. Employees of several corporations, including Google and Apple, take part in the project. The source code is available under a BSD-like license.

Clang. Part 1: Introduction

How to Tame the Dragon. A Short Example Using clang-c

clang and IDEs

Clang API. Getting Started

Numba makes Python code fast. Numba is an open source JIT compiler that translates a subset of Python and NumPy code into fast machine code.
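To make the description above concrete, here is a minimal sketch of how a plain numeric loop is typically handed to Numba. `njit` is Numba's real decorator; the function `sum_of_squares` is a made-up example, and the `ImportError` fallback is an assumption added only so the snippet still runs (uncompiled) where Numba is not installed.

```python
try:
    # Numba's decorator for compiling a function in nopython mode.
    from numba import njit
except ImportError:
    # Assumption for this sketch: without Numba, run as ordinary Python.
    def njit(func):
        return func


@njit
def sum_of_squares(n):
    """Sum i*i for i in [0, n) using a simple loop Numba can compile."""
    total = 0.0
    for i in range(n):
        total += i * i
    return total


if __name__ == "__main__":
    # With Numba present, the first call triggers compilation;
    # later calls reuse the compiled machine code.
    print(sum_of_squares(1000))
```

The loop sticks to scalars and `range`, which fall inside the Python subset Numba supports; code that leans on arbitrary Python objects generally does not compile in this mode.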

Numba for AMD ROC GPUs

The Study of Consciousness in Cognitive Psychology, Ivan Ivanchei

AMD Ryzen 5 2600 test

AMD Ryzen 7 1700X test results

CPU ranking

Tensorflow Inception v3 benchmark

low performance ?

Hardware to Play ROCm

ROCm Software Platform

Deep Learning on ROCm ROCm Tensorflow Release

HIP : Convert CUDA to Portable C++ Code

RadeonOpenCompute/ROCm ROCm – Open Source Platform for HPC and Ultrascale GPU Computing https://rocm.github.io/

ROCmSoftwarePlatform pytorch

ROCmSoftwarePlatform/MIOpen

Welcome to MIOpen, Advanced Micro Devices, Inc.'s open source deep learning library.

AMD ROCm GPUs now support TensorFlow v1.8, a major milestone for AMD’s deep learning plans

ROCm-Developer-Tools/HIP HIP : Convert CUDA to Portable C++ Code

hipCaffe Quickstart Guide Install ROCm

Half-precision floating point library

convnet-benchmarks

computeruniverse.ru VEGA

Deep Learning on ROCm Announcing our new Foundation for Deep Learning acceleration MIOpen 1.0 which introduces support for Convolution Neural Network (CNN) acceleration — built to run on top of the ROCm software stack!

MXNet is a deep learning framework that has been ported to HIP. The HIP port works on both HIP/ROCm and HIP/CUDA platforms. MXNet makes use of the rocBLAS, rocRAND, hcFFT and MIOpen APIs.

ROCmSoftwarePlatform/hipCaffe

ROCm Software Platform

"Radeon Open Compute (ROCm) is a new era for GPU computing platforms, designed to harness the power of open source software to deliver new solutions for high-performance and hyperscale computing. ROCm software gives developers complete flexibility in where and how they use GPU computing."

MIOpen

A Comparison of Deep Learning Frameworks

UserBenchmark

UserBenchmark AMD-Ryzen-TR-2990WX-vs-Intel-Core-i9-7960X

UserBenchmark AMD-Ryzen-7-2700X-vs-Intel-Core-i7-8700K

UserBenchmark AMD-Ryzen-7-2700X-vs-AMD-Ryzen-7-1800X

UserBenchmark AMD-Ryzen-7-2700X-vs-Intel-Core-i7-4790K

UserBenchmark Intel-Core-i7-4790K-vs-Intel-Core-i5-4690

UserBenchmark Intel-Core-i5-4670K-vs-Intel-Core-i7-4790K

UserBenchmark AMD-Ryzen-7-2700X-vs-Intel-Core-i7-3770K

UserBenchmark Intel-Core-i5-3570-vs-AMD-Ryzen-7-2700X

UserBenchmark Intel-Core-i5-3570-vs-Intel-Core-i7-3770K

UserBenchmark Intel-Core-i5-3570-vs-Intel-Core-i5-4690

UserBenchmark Intel-Core-i5-3570-vs-Intel-Core-i5-4670K

UserBenchmark Intel-Core-i5-3570-vs-Intel-Core-i7-4790K

Comparing Google TPUv2 and Nvidia V100 on ResNet-50

AI accelerator

eSilicon deep learning ASIC in production qualification

Benchmark of Google's new tensor processor for deep learning

Google's specialized ASIC for machine learning is tens of times faster than a GPU

MIT develops a photonic chip for deep learning

Machine Learning Series

Visual Computing Group

source{d} tech talks – Machine Learning 2017

10 Alarming Predictions for Deep Learning in 2018

Experiments with malloc and neural networks

LSTMVis: A Tool for Visual Analysis of Hidden State Dynamics in Recurrent Neural Networks

HiPiler: Visual Exploration Of Large Genome Interaction Matrices With Interactive Small Multiples

Deep Learning Hardware Limbo

OpenAI

Inside OpenAI

Math Deep learning

Facebook and Microsoft introduce new open ecosystem for interchangeable AI frameworks

Inside AI Next-level computing powered by Intel AI Intel® Nervana™ Neural Network Processor

Intel® Nervana™ Neural Network Processor: Architecture Update Dec 06, 2017

AI News January 2018

Andrej Karpathy

MIT 6.S094: Deep Reinforcement Learning for Motion Planning

RI Seminar: Sergey Levine : Deep Robotic Learning

Tim Lillicrap – Data efficient deep reinforcement learning for continuous control

Intermediate Python

Tensors and Dynamic neural networks in Python with strong GPU acceleration http://pytorch.org

Tutorial for beginners https://github.com/GunhoChoi/Kind-PyTorch-Tutorial

A set of examples around pytorch in Vision, Text, Reinforcement Learning, etc.

PyTorch documentation

Transferring a model from PyTorch to Caffe2 and Mobile using ONNX

ONNX is a new open ecosystem for interchangeable AI models.

Open Neural Network Exchange https://onnx.ai/

Intermediate Python Docs

K-Means Clustering in Python

In Depth: k-Means Clustering

K-means Clustering in Python

Clustering With K-Means in Python

Unsupervised Machine Learning: Flat Clustering K-Means clustering example with Python and Scikit-learn

ST at CES 2018 – Deep Learning on STM32

The end of the Nvidia era? Graphcore has developed chips based on computational graphs

How to choose a GPU for deep learning

Why are TPUs so well suited to deep learning?

Computer configuration for machine learning. Budget and optimal builds

Ten machine learning algorithms you need to know

Happy New Machine Learning Year!

The best graphics cards and hardware for Ethereum mining

SIGGRAPH 2017: an ML model for computing fluid dynamics without direct hydrodynamic simulation

Windows Machine Learning — WinML

Human brain activity translated into clear speech for the first time

What is modern artificial intelligence? Roman Nikiforov

Algorithms in Python 3. Lecture 1